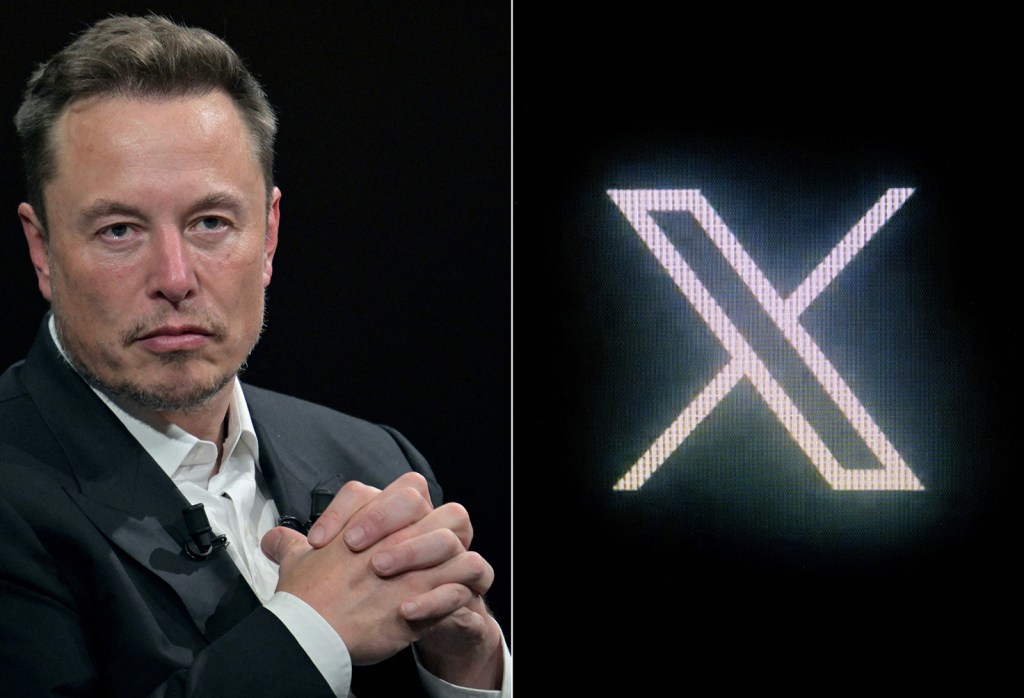

Elon Musk’s transformation of Twitter into the more free-for-all X is the most dramatic case, but other platforms are also changing their approach to monitoring. Meta Platforms Inc. has sought to downplay news and political content on Facebook, Instagram and its new Threads app. Google’s YouTube has decided that purging falsehoods about the 2020 election restricts too much political speech (Meta has a similar policy).

The shift is happening just as artificial intelligence tools offer new and accessible ways to supercharge the spread of false content — and deepening social divisions mean there’s already less trust. In Davos, where global leaders gathered this week, the World Economic Forum ranked misinformation as the biggest short-term danger in its Global Risks Report.

While platforms still require more transparency for advertisements, organic disinformation that spreads without paid placement is a “fundamental threat to American democracy,” especially as companies reevaluate their moderation practices, says Mark Jablonowski, chief technology officer for Democratic ad-tech firm DSPolitical.

“As we head into 2024, I worry they are inviting a perfect storm of electoral confusion and interference by refusing to adequately address the proliferation of false viral moments,” Jablonowski said. “Left uncorrected they could take root in the consciousness of voters and impact eventual election outcomes.”

Risky Year

With elections in some 60 countries besides the U.S., 2024 is a risky year to be testing the new dynamic.

The American campaign formally got under way last week as former President Donald Trump scored a big win in the Iowa caucus — a step toward the Republican…
